Modern digital experiences demand instant gratification, yet technical limitations create unavoidable delays. Carefully orchestrated audio and visual feedback can bridge that gap, turning waiting into engagement.

🎭 The Psychology Behind Perceived Performance

Human perception follows principles that designers can use to make experiences feel instantaneous. The brain does not register reality as a continuous live feed; it constructs a continuous narrative from discrete sensory inputs. This cognitive quirk is the foundation of the delay-masking techniques now common in interface design.

Research in cognitive psychology demonstrates that users perceive systems as faster when they receive immediate feedback, even when actual processing times remain unchanged. The critical threshold sits around 100 milliseconds—any response within this window feels instantaneous. Beyond this point, strategic sensory feedback becomes essential for maintaining the illusion of responsiveness.

The concept of “perceived performance” differs dramatically from actual performance metrics. A system that responds in 300 milliseconds with engaging feedback often feels faster than one completing tasks in 200 milliseconds with no intermediate communication. This counterintuitive reality drives the entire discipline of sensory feedback design.

🎵 Acoustic Architecture: Designing Sound for Interaction

Audio feedback serves as the invisible thread connecting user actions to system responses. Unlike visual elements that compete for attention within crowded interfaces, sound occupies a unique sensory channel that can communicate status, progress, and completion without demanding direct focus.

Effective audio design for delay masking follows specific acoustic principles. Sounds must be brief enough to avoid annoyance during repeated interactions, yet distinctive enough to register consciously. Frequency selection matters: mid-range tones between roughly 1000 and 4000 Hz, which overlap the band where human hearing is most sensitive, are easy to recognize at modest volume and typically do not cause fatigue.

The temporal structure of feedback sounds creates powerful psychological anchors. A crisp initial transient signals immediate system acknowledgment, while sustained or evolving timbres can mask processing delays of several seconds. Designers often employ rising pitch contours to suggest progression and activity, creating anticipation that makes waiting feel purposeful rather than frustrating.
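
As a minimal sketch of these principles with the Web Audio API, the function below schedules a short sine tone. It uses a near-instant attack for the crisp transient and an exponential decay, and can optionally glide upward in pitch. The `playCue` name, the structural interfaces, and the defaults (2000 Hz, 50 ms, peak gain 0.3) are illustrative assumptions, not values from a specific design system. The interfaces list only the Web Audio methods the sketch calls, so a real `AudioContext` or a test double can be passed in.

```typescript
// Structural subset of the Web Audio API used below. A real AudioContext
// should satisfy it, and so can a lightweight test double outside a browser.
interface ParamLike {
  setValueAtTime(value: number, startTime: number): unknown;
  exponentialRampToValueAtTime(value: number, endTime: number): unknown;
}
interface OscillatorLike {
  frequency: ParamLike;
  connect(destination: unknown): unknown;
  start(when?: number): void;
  stop(when?: number): void;
}
interface GainLike {
  gain: ParamLike;
  connect(destination: unknown): unknown;
}
interface AudioContextLike {
  currentTime: number;
  destination: unknown;
  createOscillator(): OscillatorLike;
  createGain(): GainLike;
}

// Hypothetical cue options; the defaults are illustrative only.
interface CueOptions {
  startHz?: number;    // 2000 Hz default sits inside the 1000-4000 Hz band
  endHz?: number;      // a value above startHz gives a rising pitch contour
  durationMs?: number; // 50 ms default: a short click
  peakGain?: number;   // 0.3 default keeps repeated cues unobtrusive
}

// Schedules one tone on the audio clock and returns the time (s) it ends.
function playCue(ctx: AudioContextLike, opts: CueOptions = {}): number {
  const startHz = opts.startHz ?? 2000;
  const endHz = opts.endHz ?? startHz;
  const durationS = Math.max(opts.durationMs ?? 50, 10) / 1000; // at least 10 ms
  const peakGain = opts.peakGain ?? 0.3;
  const attackS = 0.002; // ~2 ms rise: the crisp initial transient

  const t0 = ctx.currentTime;
  const t1 = t0 + durationS;
  const osc = ctx.createOscillator();
  const amp = ctx.createGain();

  // Pitch: hold steady, or glide exponentially (even steps in pitch) to endHz.
  osc.frequency.setValueAtTime(startHz, t0);
  if (endHz !== startHz) osc.frequency.exponentialRampToValueAtTime(endHz, t1);

  // Loudness: exponential ramps cannot target 0, so use a tiny floor value.
  amp.gain.setValueAtTime(0.0001, t0);
  amp.gain.exponentialRampToValueAtTime(peakGain, t0 + attackS);
  amp.gain.exponentialRampToValueAtTime(0.0001, t1);

  osc.connect(amp);
  amp.connect(ctx.destination);
  osc.start(t0);
  osc.stop(t1);
  return t1;
}
```

In a browser you might call `playCue(ctx)` for an input click, or `playCue(ctx, { startHz: 1200, endHz: 2400, durationMs: 400 })` for a rising completion chime. Browsers usually keep a new `AudioContext` suspended until a user gesture, so call `ctx.resume()` from the first input handler.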

Layering Sonic Complexity

Advanced audio feedback systems employ multiple layers that activate sequentially as delays extend. The initial click or tap sound confirms input registration within milliseconds. If processing continues beyond 300 milliseconds, a secondary ambient loop begins, indicating ongoing activity. Upon completion, a distinct resolution sound provides closure and reward.

This three-tier approach tracks how expectations shift during a wait. Users tolerate brief silences after immediate acknowledgment, accept extended processing when it is accompanied by active signals, and feel satisfied by clear completion indicators. Much of this sonic layer operates below conscious analysis, yet it shapes how responsive users judge the system to be.
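
The three tiers can be modeled as a small pure function. Given how long an operation actually took, it returns the sound events that would play. A real app would instead start a timer at input time and cancel it on completion, because the duration is not known up front; the function simply describes the resulting timeline. The 300 ms loop threshold comes from the text above. The event names and the `layeredTimeline` helper are hypothetical.

```typescript
type SoundLayer = "click" | "ambient-loop-start" | "ambient-loop-stop" | "resolution";

interface SoundEvent {
  atMs: number;      // offset from the user's input
  layer: SoundLayer;
}

// Hypothetical helper: the sound layers heard for an operation that
// completes after `durationMs`. The ambient loop starts only if processing
// is still running when `loopAfterMs` (300 ms in the text) has elapsed.
function layeredTimeline(durationMs: number, loopAfterMs = 300): SoundEvent[] {
  const events: SoundEvent[] = [{ atMs: 0, layer: "click" }]; // tier 1: acknowledgment
  if (durationMs > loopAfterMs) {
    events.push({ atMs: loopAfterMs, layer: "ambient-loop-start" }); // tier 2: activity
    events.push({ atMs: durationMs, layer: "ambient-loop-stop" });
  }
  events.push({ atMs: durationMs, layer: "resolution" }); // tier 3: closure
  return events;
}
```

A 120 ms operation yields only the click and the resolution sound. A 2 s operation adds the loop, which runs from 300 ms until completion.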

👁️ Visual Choreography: Motion as Communication

Visual feedback patterns leverage our innate sensitivity to movement and change. Static interfaces feel lifeless and unresponsive, while thoughtfully animated elements create the impression of living, reactive systems. The challenge lies in designing motion that informs rather than distracts, guides rather than overwhelms.

Progressive disclosure through animation masks processing time by creating narrative structure. A button that morphs into a loading indicator, then expands to reveal results, tells a visual story that makes delays feel like intentional pacing. Users experience a journey rather than a wait, transforming dead time into engaged anticipation.

Skeleton screens are a widely used alternative to generic spinners. Instead of an abstract loading indicator, they display content placeholders that mirror the structure of the incoming information. Users see the page “assembling itself,” which tends to make loading feel faster than with a spinner, even when actual timing is identical.
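
Here is a minimal sketch of the idea, assuming a list of articles. While data is loading, the renderer emits placeholder rows with the same slots as the real rows (a title bar and two summary lines), so the layout does not shift when content arrives. The `Article` and `Row` types, the `rowsToRender` helper, and the placeholder widths are hypothetical.

```typescript
interface Article { id: string; title: string; summary: string; }

// A rendered row is either real content or a placeholder with the same layout slots.
type Row =
  | { kind: "content"; key: string; title: string; summary: string }
  | { kind: "skeleton"; key: string; titleWidthPct: number; summaryLines: number };

// While `articles` is null (still loading), emit `expectedCount` skeleton rows
// that mirror the final structure: one title bar plus two summary lines.
function rowsToRender(articles: Article[] | null, expectedCount = 3): Row[] {
  if (articles !== null) {
    return articles.map((a): Row => ({ kind: "content", key: a.id, title: a.title, summary: a.summary }));
  }
  const rows: Row[] = [];
  for (let i = 0; i < expectedCount; i++) {
    rows.push({
      kind: "skeleton",
      key: `skeleton-${i}`,
      titleWidthPct: 60 + ((i * 17) % 30), // vary widths so rows don't look cloned
      summaryLines: 2,
    });
  }
  return rows;
}
```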

The Physics of Perceived Speed

Animation timing curves dramatically affect perceived responsiveness. Linear motion feels mechanical and cheap, while easing functions that accelerate quickly and decelerate gradually suggest physical momentum and quality. The standard ease-out curve (decelerating motion) particularly excels at making interactions feel snappy and responsive.

Duration matters as much as motion style. Animations between 200-400 milliseconds hit the sweet spot—long enough to register as smooth and intentional, short enough to avoid feeling sluggish. Faster animations risk appearing jittery, while slower ones drain away the energy that makes interfaces feel alive.
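
To make the difference concrete, the sketch below compares linear progress with a cubic ease-out and samples an animation at an assumed 60 Hz refresh rate. `easeOutCubic` is one common ease-out formula. CSS's built-in `ease-out` keyword is a different but similar curve, `cubic-bezier(0, 0, 0.58, 1)`. Clamping durations to the 200 to 400 ms band from the text is an illustrative policy, not a standard.

```typescript
type Easing = (t: number) => number;

const linearEase: Easing = (t) => t;
// Fast start, gradual settle: 87.5% of the distance is covered at the halfway point.
const easeOutCubic: Easing = (t) => 1 - Math.pow(1 - t, 3);

// Keep durations inside the 200-400 ms band discussed above.
function clampDuration(ms: number, min = 200, max = 400): number {
  return Math.min(max, Math.max(min, ms));
}

// Progress values an animation would show on each frame (assumes ~60 Hz).
function sampleProgress(durationMs: number, easing: Easing, frameMs = 1000 / 60): number[] {
  const frameCount = Math.max(1, Math.ceil(durationMs / frameMs));
  const samples: number[] = [];
  for (let i = 0; i <= frameCount; i++) {
    samples.push(easing(Math.min(1, (i * frameMs) / durationMs)));
  }
  return samples;
}
```

At the midpoint, `linearEase(0.5)` is 0.5 while `easeOutCubic(0.5)` is 0.875. Most of the visible motion happens early, which is why ease-out reads as snappy.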

🔄 Synchronized Multisensory Experiences

The true power of delay masking emerges when audio and visual feedback synchronize into cohesive sensory symphonies. Isolated feedback channels provide utility, but coordinated multisensory patterns create immersive experiences that transcend the sum of their parts.

Temporal alignment between sound and motion is critical. Audio should trigger as the visual motion begins, so that both are perceived as a single event. Misaligned feedback, with sound arriving as little as 50 milliseconds before or after the visual change, can break immersion and highlight system latency rather than masking it.
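
In a browser, one way to keep the two channels aligned is to schedule the sound on the audio clock for the same moment the animation starts on the display clock. `AudioContext.getOutputTimestamp()` returns a pair of matching clock readings: `contextTime` in seconds and `performanceTime` in milliseconds. This pair lets you convert between the two clocks. The helper names below are hypothetical, and the 50 ms tolerance is the figure from the text, not a standard.

```typescript
// Shape of the object returned by AudioContext.getOutputTimestamp()
// (both fields are optional in the DOM typings; check before use).
interface ClockSnapshot {
  contextTime: number;     // seconds, AudioContext clock
  performanceTime: number; // milliseconds, performance.now() clock
}

// Convert a performance.now() timestamp (ms), such as the start of an
// animation, into AudioContext time (s) for scheduling with start(when).
function perfToContextTime(perfMs: number, snap: ClockSnapshot): number {
  return snap.contextTime + (perfMs - snap.performanceTime) / 1000;
}

// True when audio and visual onsets are closer than the tolerance.
function isAligned(audioOnsetMs: number, visualOnsetMs: number, toleranceMs = 50): boolean {
  return Math.abs(audioOnsetMs - visualOnsetMs) < toleranceMs;
}
```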

Amplitude and intensity should correlate across sensory channels. Subtle visual transitions pair with quiet, gentle sounds, while dramatic animations warrant more pronounced acoustic accompaniment. This natural correspondence mirrors real-world physics, where larger movements generate louder sounds, making digital interactions feel grounded in physical reality.
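
A simple way to tie loudness to motion is to map a normalized animation magnitude onto a decibel range. The decibel value is then converted to a linear gain with gain = 10^(dB/20). Interpolating in decibels makes equal steps in magnitude sound like roughly equal steps in loudness. The -30 dB to -6 dB range, the 400 px reference distance, and both helper names are illustrative assumptions.

```typescript
// Map a normalized motion magnitude (0 = subtle, 1 = dramatic) to a linear
// gain value, for example for a GainNode, via an assumed -30..-6 dB range.
function gainForMotion(magnitude: number, minDb = -30, maxDb = -6): number {
  const m = Math.min(1, Math.max(0, magnitude)); // clamp to [0, 1]
  const db = minDb + m * (maxDb - minDb);        // interpolate in decibels
  return Math.pow(10, db / 20);                  // dB to amplitude ratio
}

// Derive magnitude from how far an element travels, relative to a
// hypothetical 400 px "large movement" reference.
function motionMagnitude(distancePx: number, referencePx = 400): number {
  return Math.min(1, Math.abs(distancePx) / referencePx);
}
```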

Cross-Modal Sensory Substitution

Advanced implementations use one sensory channel to compensate for limitations in another. When visual complexity prevents clear animated feedback, rich audio cues fill the communication gap. Conversely, in sound-sensitive environments, purely visual patterns convey the same information through alternative means.

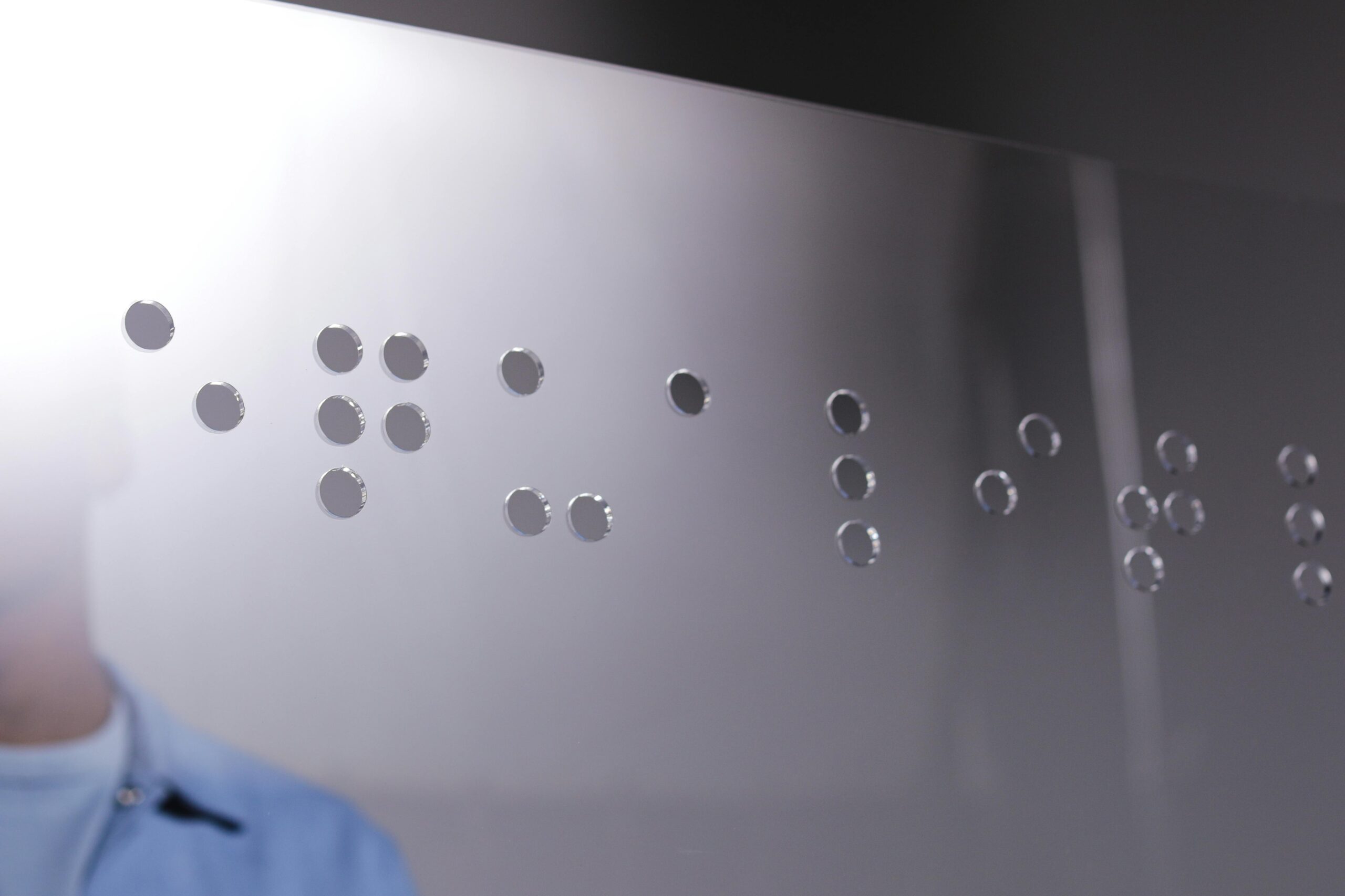

This redundancy provides accessibility benefits while enhancing robustness. Users with visual impairments rely on audio feedback, while those with hearing challenges depend on visual cues. Designing both channels to independently convey complete information ensures universal usability without sacrificing sophistication.

📊 Pattern Libraries: Building Blocks of Feedback Systems

Establishing consistent feedback patterns across applications creates learned expectations that reduce cognitive load. Users internalize these sensory vocabularies, enabling instant recognition and interpretation without conscious effort. This familiarity accelerates interaction while making systems feel polished and professional.

Common interaction categories each warrant distinct feedback signatures. Selection actions (taps, clicks) require immediate, percussive responses. Continuous inputs (scrolling, dragging) benefit from sustained, flowing feedback. State changes demand clear transitional cues. Error conditions need distinctive, attention-grabbing patterns that clearly differ from success signals.

| Interaction Type | Audio Pattern | Visual Pattern | Typical Duration |
|---|---|---|---|
| Input Confirmation | Sharp click (50 ms) | Button press animation | 100-150 ms |
| Processing Indicator | Ambient loop | Progressive spinner/skeleton | Variable |
| Success Completion | Rising chime | Checkmark + fade | 400-600 ms |
| Error State | Dissonant buzz | Shake + red highlight | 300-500 ms |
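
The table maps naturally onto a typed pattern library that every component reads from, which keeps feedback signatures consistent across an application. The identifiers and the `[min, max]` encoding of durations below are assumptions; the values come from the table.

```typescript
type InteractionType = "inputConfirmation" | "processing" | "success" | "error";

interface FeedbackPattern {
  audio: string;
  visual: string;
  // Typical duration range in ms, or "variable" for open-ended processing.
  durationMs: readonly [number, number] | "variable";
}

const FEEDBACK_PATTERNS: Readonly<Record<InteractionType, FeedbackPattern>> = {
  inputConfirmation: { audio: "sharp click (50 ms)", visual: "button press animation", durationMs: [100, 150] },
  processing: { audio: "ambient loop", visual: "progressive spinner/skeleton", durationMs: "variable" },
  success: { audio: "rising chime", visual: "checkmark + fade", durationMs: [400, 600] },
  error: { audio: "dissonant buzz", visual: "shake + red highlight", durationMs: [300, 500] },
};

// A concrete duration to animate with: the midpoint of the range,
// or null when the pattern is open-ended.
function nominalDurationMs(type: InteractionType): number | null {
  const d = FEEDBACK_PATTERNS[type].durationMs;
  return d === "variable" ? null : (d[0] + d[1]) / 2;
}
```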

⚡ Real-Time Adaptation: Intelligent Feedback Systems

Static feedback patterns cannot optimally serve all scenarios. Network conditions fluctuate, device capabilities vary, and processing complexity changes dynamically. Intelligent systems adjust feedback strategies in real-time, matching communication intensity to actual delay duration and context.

Adaptive feedback employs threshold-based escalation. Operations completing within 100 milliseconds receive minimal acknowledgment—perhaps only a subtle visual shift. Delays extending to 500 milliseconds trigger intermediate feedback layers. Beyond one second, systems deploy full sensory experiences including animated progress indicators and ambient sound loops.

This graduated approach prevents feedback fatigue during fast operations while ensuring adequate communication during extended delays. Users never wonder about system status, yet fast interactions remain clean and uncluttered by unnecessary embellishment.
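
The final duration is unknown when an operation starts, so escalation is usually driven by elapsed time: each tier switches on as its threshold passes. In practice you would set one timer per threshold and clear them all when the operation completes. The sketch uses the 100 ms and 1 s thresholds from the text and treats everything in between as the intermediate tier. The `Tier` names and both helpers are hypothetical.

```typescript
type Tier = "minimal" | "intermediate" | "full";

// Elapsed time (ms) at which each tier becomes active.
const TIER_THRESHOLDS: ReadonlyArray<readonly [number, Tier]> = [
  [0, "minimal"],
  [100, "intermediate"],
  [1000, "full"],
];

// The most intense tier active after `elapsedMs` of waiting.
function tierAt(elapsedMs: number): Tier {
  let current: Tier = "minimal";
  for (const [threshold, tier] of TIER_THRESHOLDS) {
    if (elapsedMs >= threshold) current = tier;
  }
  return current;
}

// Every tier a user would see for an operation that finishes after
// `durationMs`: a tier appears only if work is still running past its threshold.
function tiersShown(durationMs: number): Tier[] {
  return TIER_THRESHOLDS
    .filter(([threshold]) => threshold === 0 || threshold < durationMs)
    .map(([, tier]) => tier);
}
```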

Contextual Awareness

Sophisticated implementations consider environmental context when selecting feedback strategies. A mobile application can estimate ambient noise (where microphone access is granted) and adjust audio feedback volume accordingly. Systems can read accessibility settings and emphasize the appropriate sensory channels. Time-of-day awareness enables quiet modes during typical sleeping hours.
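
A rule-based version of this contextual adjustment might look like the following sketch. The input fields, the 40 to 80 dB ambient range, the 22:00 to 07:00 quiet-hours window, and the 0.2 volume cap are all illustrative assumptions. Measuring ambient noise requires microphone access, and how accessibility settings are read depends on the platform.

```typescript
interface FeedbackContext {
  ambientDb: number;          // estimated ambient noise level (assumed input)
  hour: number;               // local hour, 0-23
  deviceMuted: boolean;
  screenReaderActive: boolean;
}

interface FeedbackPlan {
  audioVolume: number;        // 0..1 multiplier for feedback sounds
  emphasizeAudio: boolean;    // lean on audio cues, e.g. for screen-reader users
}

function planFeedback(context: FeedbackContext): FeedbackPlan {
  // Respect the device mute switch: visual feedback only.
  if (context.deviceMuted) return { audioVolume: 0, emphasizeAudio: false };

  // Scale from 0.3 in a quiet room (<= 40 dB) to 1.0 in a loud one (>= 80 dB).
  const noise = Math.min(1, Math.max(0, (context.ambientDb - 40) / 40));
  let volume = 0.3 + 0.7 * noise;

  // Quiet hours wrap around midnight: 22:00 through 06:59.
  const quietHours = context.hour >= 22 || context.hour < 7;
  if (quietHours) volume = Math.min(volume, 0.2);

  return { audioVolume: volume, emphasizeAudio: context.screenReaderActive };
}
```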

User behavior patterns inform feedback customization over time. Machine learning algorithms identify individual preferences and tolerance thresholds, gradually tuning sensory responses to match personal expectations. This invisible personalization makes systems feel increasingly natural and responsive through continued use.

🎮 Gaming the Wait: Engagement Through Interactivity

The most sophisticated delay masking techniques transform waiting periods into micro-engagement opportunities. Rather than passively observing progress indicators, users interact with feedback systems themselves, creating active participation that makes delays feel shorter and more tolerable.

Pull-to-refresh interactions exemplify this principle perfectly. The physical gesture of dragging downward, combined with progressive visual feedback showing accumulated “potential energy,” transforms loading into gameplay. Users feel agency and control, dramatically reducing frustration despite unchanged actual loading times.
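
The "potential energy" of a pull gesture is typically a progress value derived from drag distance. Resistance makes the content move more slowly than the finger. In this sketch, the 80 px threshold and the 0.5 resistance factor are illustrative assumptions, and `pullState` is a hypothetical helper. The returned `progress` could drive a filling indicator, and `armed` decides whether releasing the finger triggers a refresh.

```typescript
interface PullState {
  visualOffsetPx: number; // how far the content actually moves
  progress: number;       // 0..1, drives the "charging" indicator
  armed: boolean;         // releasing now would trigger a refresh
}

function pullState(dragPx: number, thresholdPx = 80, resistance = 0.5): PullState {
  const drag = Math.max(0, dragPx);              // ignore upward drags
  const visualOffsetPx = drag * resistance;      // content lags the finger
  const progress = Math.min(1, visualOffsetPx / thresholdPx);
  return { visualOffsetPx, progress, armed: progress >= 1 };
}
```

With these defaults, an 80 px drag moves the content 40 px and fills the indicator halfway. The refresh arms at 160 px of finger travel.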

Gestural feedback systems expand this concept further. Allowing users to manipulate loading animations through touch, rotate skeleton screens, or trigger variations in ambient sounds creates engagement loops that occupy attention during processing delays. These micro-interactions provide just enough stimulation to prevent impatience without distracting from core tasks.

🛠️ Implementation Strategies and Technical Considerations

Translating sensory design theory into production code requires careful technical planning. Audio systems must handle multiple simultaneous sounds without distortion or latency. Visual animations need hardware acceleration to maintain smooth frame rates across diverse devices. Synchronization mechanisms ensure audio-visual alignment despite independent rendering pipelines.

Web technologies like the Web Audio API provide low-latency sound playback with precise timing control. CSS animations and transitions offer hardware-accelerated visual effects with minimal performance overhead, particularly when they animate compositor-friendly properties such as transform and opacity. The requestAnimationFrame API enables smooth custom animations synchronized to the display's refresh rate.
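
A custom animation driven by requestAnimationFrame should compute progress from the timestamp the browser passes to each callback (in milliseconds) rather than by counting frames. That way it finishes on time even when frames are dropped. The sketch below injects the frame scheduler so the loop can also run outside a browser. `runAnimation` and its parameters are hypothetical names.

```typescript
type FrameCallback = (timestampMs: number) => void;
type ScheduleFrame = (callback: FrameCallback) => void;

// Drive an animation from frame timestamps; resolves when progress reaches 1.
function runAnimation(
  durationMs: number,
  easing: (t: number) => number,
  onFrame: (value: number) => void,
  scheduleFrame: ScheduleFrame,
): Promise<void> {
  return new Promise<void>((resolve) => {
    let startMs: number | undefined;
    const step: FrameCallback = (nowMs) => {
      if (startMs === undefined) startMs = nowMs; // first frame defines t = 0
      const t = Math.min(1, (nowMs - startMs) / durationMs);
      onFrame(easing(t));
      if (t < 1) scheduleFrame(step);
      else resolve();
    };
    scheduleFrame(step);
  });
}

// Browser usage (not run here):
// runAnimation(300, (t) => 1 - Math.pow(1 - t, 3),
//   (v) => { el.style.transform = `translateY(${(1 - v) * 20}px)`; },
//   (cb) => { requestAnimationFrame(cb); });
```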

Mobile platforms present unique challenges and opportunities. Native audio systems provide lower latency than web equivalents, enabling tighter feedback loops. Haptic feedback adds a third sensory dimension particularly powerful on touchscreen devices. Platform-specific gesture recognizers enable sophisticated interaction patterns that feel native and polished.

Performance Optimization

Feedback systems must never contribute to the delays they aim to mask. Audio files require optimization—compressed formats, appropriate sample rates, and preloading strategies prevent playback delays. Visual assets need similar attention—vector graphics, sprite sheets, and texture atlases reduce loading overhead.
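
Preloading is easiest to get right with a small cache that stores one load per asset. The first playback of a cue then never waits on the network or on decoding. In this sketch the loader is injected. In a browser it would typically fetch the file and call `AudioContext.decodeAudioData()`, which returns a promise of an `AudioBuffer`. The `AssetCache` class and the sound file paths in the comment are hypothetical.

```typescript
// Caches one in-flight or completed load per URL, so repeated cues never
// re-fetch or re-decode. Failed loads are evicted so they can be retried.
class AssetCache<T> {
  private readonly entries = new Map<string, Promise<T>>();
  private readonly load: (url: string) => Promise<T>;

  constructor(load: (url: string) => Promise<T>) {
    this.load = load;
  }

  get(url: string): Promise<T> {
    let entry = this.entries.get(url);
    if (entry === undefined) {
      entry = this.load(url);
      this.entries.set(url, entry);
      entry.catch(() => this.entries.delete(url)); // allow a retry after failure
    }
    return entry;
  }

  // Warm the cache ahead of first use, e.g. at app start.
  preload(urls: string[]): Promise<T[]> {
    return Promise.all(urls.map((url) => this.get(url)));
  }
}

// Browser usage (not run here):
// const ctx = new AudioContext();
// const sounds = new AssetCache(async (url) =>
//   ctx.decodeAudioData(await (await fetch(url)).arrayBuffer()));
// sounds.preload(["/sounds/click.ogg", "/sounds/chime.ogg"]);
```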

Lazy loading and progressive enhancement ensure feedback systems activate only when needed. Simple interactions receive lightweight responses, while complex operations justify richer sensory experiences. This tiered approach maintains performance while enabling sophisticated feedback where it matters most.

🌐 Cross-Platform Consistency and Adaptation

Modern applications span multiple platforms, each with distinct interaction paradigms and user expectations. Effective feedback strategies balance consistency with platform-appropriate adaptation. Core sensory principles remain constant while implementation details respect platform conventions.

Desktop interfaces traditionally emphasize visual feedback with optional audio cues. Mobile platforms integrate touch, sound, and haptics more equally. Voice interfaces rely almost entirely on audio feedback with minimal visual components. Web applications must function across this entire spectrum, detecting capabilities and adapting accordingly.

Progressive enhancement provides the solution framework. Base implementations ensure functional feedback across all platforms. Enhanced layers activate when additional capabilities become available. This approach guarantees universal usability while enabling optimized experiences on capable devices.
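
On the web, this layering is usually implemented with feature detection. The sketch below checks for the Web Audio API, the Vibration API (`navigator.vibrate`), and the `prefers-reduced-motion` media query. It then adds layers on top of a visual baseline that always works. Browser support for `navigator.vibrate` varies, so the haptic layer stays optional. The layer names and both helpers are hypothetical.

```typescript
interface FeedbackCapabilities {
  webAudio: boolean;
  vibration: boolean;
  reducedMotion: boolean;
}

type FeedbackLayer = "visual-basic" | "visual-motion" | "audio" | "haptic";

// Runtime feature detection; every check degrades to false outside a browser.
function detectCapabilities(): FeedbackCapabilities {
  const g = globalThis as unknown as {
    AudioContext?: unknown;
    navigator?: { vibrate?: unknown };
    matchMedia?: (query: string) => { matches: boolean };
  };
  return {
    webAudio: typeof g.AudioContext === "function",
    vibration: typeof g.navigator?.vibrate === "function",
    reducedMotion:
      typeof g.matchMedia === "function" && g.matchMedia("(prefers-reduced-motion: reduce)").matches,
  };
}

// Base layer always on; each enhancement only when the capability exists.
function selectLayers(caps: FeedbackCapabilities): FeedbackLayer[] {
  const layers: FeedbackLayer[] = ["visual-basic"];
  if (!caps.reducedMotion) layers.push("visual-motion");
  if (caps.webAudio) layers.push("audio");
  if (caps.vibration) layers.push("haptic");
  return layers;
}
```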

🔮 Future Horizons: Emerging Feedback Paradigms

Advancing technology continually expands the palette of available feedback mechanisms. Haptic systems evolve beyond simple vibration toward nuanced tactile communication. Spatial audio creates three-dimensional soundscapes that guide attention and convey complex information. Augmented reality interfaces blend digital feedback with physical environments.

Artificial intelligence can enable predictive feedback that anticipates user needs before explicit requests. Systems learn individual usage patterns and preemptively load resources, reducing delays to near-zero while maintaining engagement through subtle confirmation cues. This proactive approach is the logical endpoint of delay masking: making delays imperceptible while still communicating responsively.

Neurological interfaces represent the distant frontier where feedback systems might bypass traditional sensory channels entirely. Direct neural stimulation could communicate system status with unprecedented speed and clarity. While such technologies remain largely experimental, they illustrate the continuing quest for ever-more-seamless human-computer interaction.

🎯 Measuring Success: Metrics That Matter

Quantifying feedback effectiveness requires metrics beyond simple timing measurements. User satisfaction surveys provide subjective quality assessments. Task completion rates and error frequencies reveal whether feedback successfully guides interaction. Engagement duration during delayed operations indicates whether masking strategies maintain attention.

A/B testing different feedback patterns reveals preferences and optimal configurations. Heatmaps and interaction recordings show how users respond to various sensory cues. Pairing perceived-speed ratings from surveys with measured timings quantifies how effective the masking techniques are.
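
For a binary outcome such as task completion, a two-proportion z-test is one standard way to judge whether the difference between two feedback variants is larger than chance. The sketch uses the pooled normal approximation, which assumes reasonably large, independent samples. The counts in the usage note are invented for illustration. For continuous metrics such as survey scores, a t-test would be the analogous choice.

```typescript
interface VariantResult {
  successes: number; // e.g. sessions in which the task was completed
  trials: number;    // sessions exposed to this variant
}

// Pooled two-proportion z statistic for variant B versus variant A.
// Positive z means B's rate is higher; |z| > 1.96 corresponds to
// p < 0.05 (two-sided) under the normal approximation.
function twoProportionZ(a: VariantResult, b: VariantResult): number {
  const pA = a.successes / a.trials;
  const pB = b.successes / b.trials;
  const pooled = (a.successes + b.successes) / (a.trials + b.trials);
  const se = Math.sqrt(pooled * (1 - pooled) * (1 / a.trials + 1 / b.trials));
  if (se === 0) return 0; // all successes or all failures: no evidence either way
  return (pB - pA) / se;
}
```

For example, 50/100 completions with the old feedback against 60/100 with the new one gives z of about 1.42. That is suggestive but below the conventional 1.96 cutoff, so more data would be needed.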

Long-term retention and return rates ultimately validate feedback design quality. Systems that feel responsive and engaging retain users despite technical limitations. Poor feedback experiences drive abandonment regardless of underlying performance. These business metrics connect sensory design directly to organizational success.

🎼 Orchestrating the Perfect Sensory Experience

Creating masterful audio-visual feedback systems requires balancing numerous competing considerations. Responsiveness, aesthetics, performance, accessibility, and platform conventions all demand attention. The most successful implementations find elegant solutions that satisfy multiple requirements simultaneously through thoughtful, holistic design.

The art of delay masking transcends technical implementation to become psychological design. Understanding human perception, expectation, and tolerance enables creation of experiences that feel faster than reality. Coordinated sensory feedback transforms unavoidable delays from frustrating obstacles into seamless components of engaging interactions.

As digital experiences continue evolving, the principles of sensory feedback design remain constant. Immediate acknowledgment, progressive communication, and satisfying completion form the foundation of responsive-feeling systems. Whether implemented through sound, motion, haptics, or technologies yet to be imagined, these core concepts will continue shaping the future of human-computer interaction. The symphony plays on, always refining, always improving, always striving for that perfect harmony between human expectation and technical reality. 🎵

Toni Santos is a dialogue systems researcher and voice interaction specialist focusing on conversational flow tuning, intent-detection refinement, latency perception modeling, and pronunciation error handling. Through an interdisciplinary and technically focused lens, Toni investigates how intelligent systems interpret, respond to, and adapt to natural language across accents, contexts, and real-time interactions. His work is grounded in a fascination with speech not only as communication, but as a carrier of hidden meaning. From intent ambiguity resolution to phonetic variance and conversational repair strategies, Toni uncovers the technical and linguistic tools through which systems preserve their understanding of the spoken unknown. With a background in dialogue design and computational linguistics, Toni blends flow analysis with behavioral research to reveal how conversations are used to shape understanding, transmit intent, and encode user expectation. As the creative mind behind zorlenyx, Toni curates interaction taxonomies, speculative voice studies, and linguistic interpretations that revive the deep technical ties between speech, system behavior, and responsive intelligence. His work is a tribute to the lost fluency of Conversational Flow Tuning Practices, the precise mechanisms of Intent-Detection Refinement and Disambiguation, the perceptual presence of Latency Perception Modeling, and the layered phonetic handling of Pronunciation Error Detection and Recovery. Whether you're a voice interaction designer, conversational AI researcher, or curious builder of responsive dialogue systems, Toni invites you to explore the hidden layers of spoken understanding: one turn, one intent, one repair at a time.